Introduction

The operation of this site construction was completed last December, and I’m finally able to resume full-scale activities this year. However, I was too busy in January and February to work properly, and that’s why my first post is delayed until March. The first task in this year is to revise the blend recipe for the learning model (checkpoint=ckpt) to reflect my changed environment.

“my changed environment” has to do with last year’s misfortunes. Last October, my Pixiv account with about 10,000 followers was frozen, and I had to hastily build my personal website to escape.The curse of the “Pixiv Terms of Use” is gone. Until now, I had to put up with Pixiv’s terms of use to avoid having AI output too realistic, but now that I have been kicked out from PIxiv, there are no such restrictions any more. From now on, I can generate as many photorealistic pregnant girl-children as I like and masturbate with those pregnant-waifu-children without any hesitation. It’s a lucky failure.

In order to bring the style closer to photorealistic, I will create a new recipe for ckpt blending. I will also take this opportunity to learn and practice Merge-Block-Weights (MBW), as I have only used simple-merge so far.

Stuffs to Use

Checkpoints

I selected 3 ckpts that looked good as a result of my exploring in CivitAI. These 3 ckpts will be MBW-merged to formulate my expressive intent I’m seeking.

CivitAI : https://civitai.com

- Real Moon (ver 10.0) : https://civitai.com/models/67192/real-moon

- Beautiful Realistic Asians (ver 7.0) : https://civitai.com/models/25494/beautiful-realistic-asians

- AgainMix (ver 1.8) : https://civitai.com/models/167100/againmix

The work process is to merge BeautifulRealisticAsians and AgainMix based on RealMoon to create a photorealistic ckpt that can generate a cute&nude Japanese elementary-schoolgirl. By the way, the latest version of AgainMix is ver1.9. However, that model was updated while I was writing this article, so I actually used the previous version, ver1.8.

SuperMerger

I’ll use SuperMerger, an extension of AUTOMATIC1111, to execute Merge-Block-Weights and to adjust weights for each U-Net layer.

sd-webui-supermerger : https://github.com/hako-mikan/sd-webui-supermerger

I don’t think an explanation of how to install extensions is necessary, so I’ll skip that part. Also if I get too involved with MBW, I’ll end up in a bottomless swamp, so limit myself to basic usage.

The best feature of SuperMerger is that it can try txt2img with the temporarily-merged-model stored in RAM. This is useful for extending the life of SSDs, since image generation can be tried without having to write the merged-model to SSDs every time. By the way, I started using StableDiffusion in August 2022, and it has consumed 30% of my SSD life in a year and a few months so far. Also the time efficiency will be greatly improved. I personally feel that it is about 1/10th of the time required for the simple-merge method, which is a world away from the period when I was repeating the simple-merges based on WaifuDiffusion 1.2 in August-September of 2022.

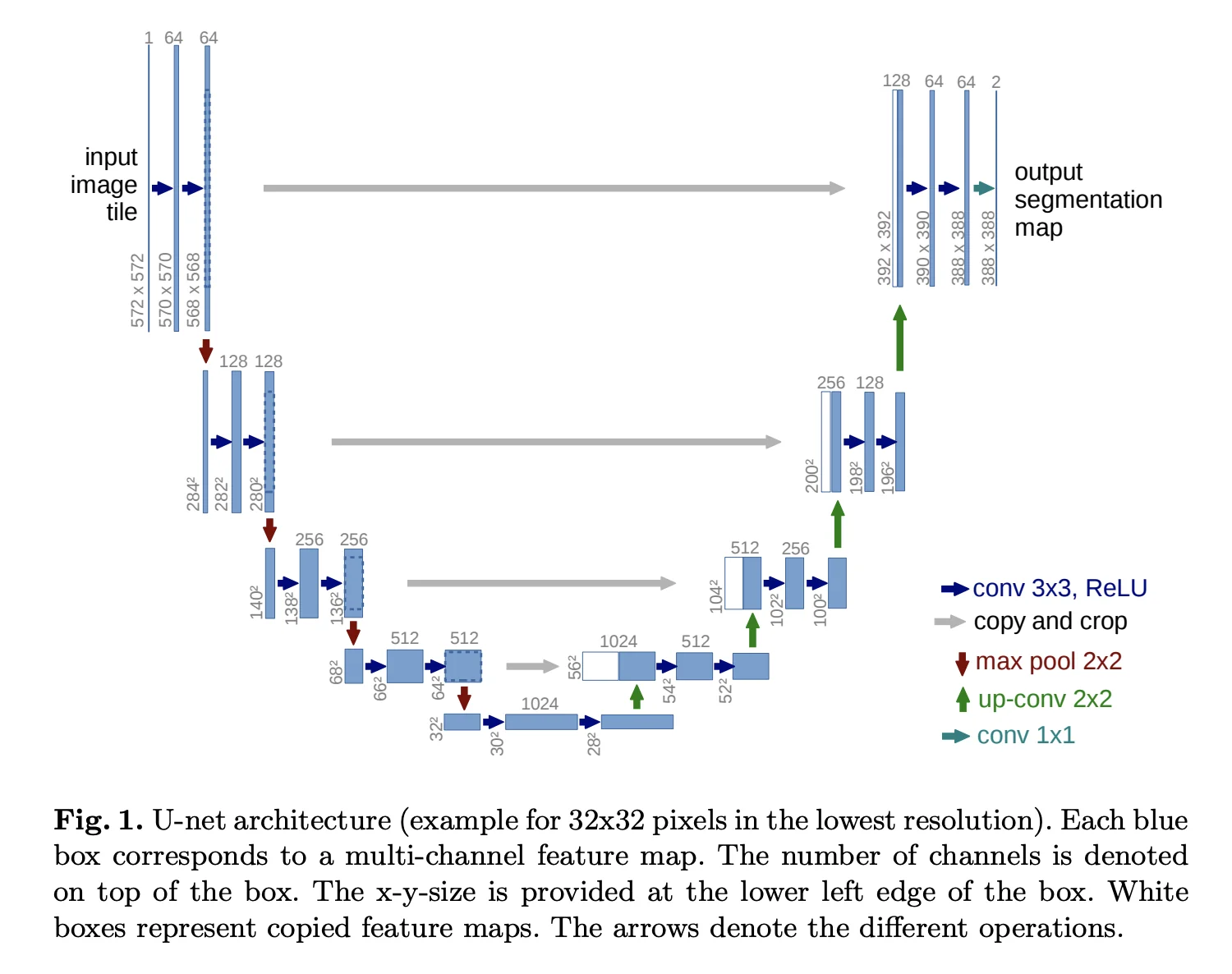

The difference between simple-merge and MBW is in the degree of interference with U-Net, which is apparently a type of convolutional neural network (CNN) frequently used in the field of deep learning. I have completely no idea.

In StableDiffusion, U-Net is used as a noise diffusion model. As you know, image generation in StableDiffusion is done by repeating noise elimination steps, and the core of this noise control is U-Net. What we usually call “ckpt (checkpoint)” or “learning model” should be more strictly called U-Net model, right? I didn’t know that, U-Net-chan! By the way, the U-Net-chan’s structure diagram is so cute, like a clitoris.

The U-Net in StableDiffusion is divided into 25 layers: 12 layers on the input side, 12 layers on the output side, and 1 layer in the middle. MBW merges multiple models into one by individually setting the weights for each of these 26 layers, plus 1 layer of base alpha. The simple-merge, on the other hand, sets only base-alpha. It is very hard to adjust 25 more parameters when it was hard even for a simple-merge to change only the base-alpha. Moreover, the layers of the U-Net interfere with each other, and it is not clear which layer affects which part of the final generated image and how.

| BASE | IN | MID | OUT |

|---|---|---|---|

| BASE | IN00 IN01 IN02 IN03 IN04 IN05 IN06 IN07 IN08 IN09 IN10 IN11 | MID | OUT00 OUT01 OUT02 OUT03 OUT04 OUT05 OUT06 OUT07 OUT08 OUT09 OUT10 OUT11 |

It would be insane to measure the impact of the above 26 parameters by setting them numerically in detail. Therefore, I’ll execute MBW using the presets that have been prepared in SuperMerger.

The following 42 presets are available in SuperMerger for MBW.

| Preset Name | Weights for each U-Net layer |

|---|---|

| GRAD_V | 0,1,0.9166666667,0.8333333333,0.75,0.6666666667,0.5833333333,0.5,0.4166666667,0.3333333333,0.25,0.1666666667,0.0833333333,0,0.0833333333,0.1666666667,0.25,0.3333333333,0.4166666667,0.5,0.5833333333,0.6666666667,0.75,0.8333333333,0.9166666667,1.0 |

| GRAD_A | 0,0,0.0833333333,0.1666666667,0.25,0.3333333333,0.4166666667,0.5,0.5833333333,0.6666666667,0.75,0.8333333333,0.9166666667,1.0,0.9166666667,0.8333333333,0.75,0.6666666667,0.5833333333,0.5,0.4166666667,0.3333333333,0.25,0.1666666667,0.0833333333,0 |

| FLAT_25 | 0,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25 |

| FLAT_50 | 0,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5 |

| FLAT_75 | 0,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75 |

| WRAP08 | 0,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1 |

| WRAP12 | 0,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1 |

| WRAP14 | 0,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1 |

| WRAP16 | 0,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1 |

| MID12_50 | 0,0,0,0,0,0,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0,0,0,0,0,0 |

| OUT07 | 0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1 |

| OUT12 | 0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,1 |

| OUT12_5 | 0,0,0,0,0,0,0,0,0,0,0,0,0,0.5,1,1,1,1,1,1,1,1,1,1,1,1 |

| RING08_SOFT | 0,0,0,0,0,0,0.5,1,1,1,0.5,0,0,0,0,0,0.5,1,1,1,0.5,0,0,0,0,0 |

| RING08_5 | 0,0,0,0,0,0,0,1,1,1,1,0,0,0,0,0,1,1,1,1,0,0,0,0,0,0 |

| RING10_5 | 0,0,0,0,0,0,1,1,1,1,1,0,0,0,0,0,1,1,1,1,1,0,0,0,0,0 |

| RING10_3 | 0,0,0,0,0,0,0,1,1,1,1,1,0,0,0,1,1,1,1,1,0,0,0,0,0,0 |

| SMOOTHSTEP | 0,0,0.00506365740740741,0.0196759259259259,0.04296875,0.0740740740740741,0.112123842592593,0.15625,0.205584490740741,0.259259259259259,0.31640625,0.376157407407407,0.437644675925926,0.5,0.562355324074074,0.623842592592592,0.68359375,0.740740740740741,0.794415509259259,0.84375,0.887876157407408,0.925925925925926,0.95703125,0.980324074074074,0.994936342592593,1 |

| REVERSE-SMOOTHSTEP | 0,1,0.994936342592593,0.980324074074074,0.95703125,0.925925925925926,0.887876157407407,0.84375,0.794415509259259,0.740740740740741,0.68359375,0.623842592592593,0.562355324074074,0.5,0.437644675925926,0.376157407407408,0.31640625,0.259259259259259,0.205584490740741,0.15625,0.112123842592592,0.0740740740740742,0.0429687499999996,0.0196759259259258,0.00506365740740744,0 |

| SMOOTHSTEP*2 | 0,0,0.0101273148148148,0.0393518518518519,0.0859375,0.148148148148148,0.224247685185185,0.3125,0.411168981481482,0.518518518518519,0.6328125,0.752314814814815,0.875289351851852,1.,0.875289351851852,0.752314814814815,0.6328125,0.518518518518519,0.411168981481481,0.3125,0.224247685185184,0.148148148148148,0.0859375,0.0393518518518512,0.0101273148148153,0 |

| R_SMOOTHSTEP*2 | 0,1,0.989872685185185,0.960648148148148,0.9140625,0.851851851851852,0.775752314814815,0.6875,0.588831018518519,0.481481481481481,0.3671875,0.247685185185185,0.124710648148148,0.,0.124710648148148,0.247685185185185,0.3671875,0.481481481481481,0.588831018518519,0.6875,0.775752314814816,0.851851851851852,0.9140625,0.960648148148149,0.989872685185185,1 |

| SMOOTHSTEP*3 | 0,0,0.0151909722222222,0.0590277777777778,0.12890625,0.222222222222222,0.336371527777778,0.46875,0.616753472222222,0.777777777777778,0.94921875,0.871527777777778,0.687065972222222,0.5,0.312934027777778,0.128472222222222,0.0507812500000004,0.222222222222222,0.383246527777778,0.53125,0.663628472222223,0.777777777777778,0.87109375,0.940972222222222,0.984809027777777,1 |

| R_SMOOTHSTEP*3 | 0,1,0.984809027777778,0.940972222222222,0.87109375,0.777777777777778,0.663628472222222,0.53125,0.383246527777778,0.222222222222222,0.05078125,0.128472222222222,0.312934027777778,0.5,0.687065972222222,0.871527777777778,0.94921875,0.777777777777778,0.616753472222222,0.46875,0.336371527777777,0.222222222222222,0.12890625,0.0590277777777777,0.0151909722222232,0 |

| SMOOTHSTEP*4 | 0,0,0.0202546296296296,0.0787037037037037,0.171875,0.296296296296296,0.44849537037037,0.625,0.822337962962963,0.962962962962963,0.734375,0.49537037037037,0.249421296296296,0.,0.249421296296296,0.495370370370371,0.734375000000001,0.962962962962963,0.822337962962962,0.625,0.448495370370369,0.296296296296297,0.171875,0.0787037037037024,0.0202546296296307,0 |

| R_SMOOTHSTEP*4 | 0,1,0.97974537037037,0.921296296296296,0.828125,0.703703703703704,0.55150462962963,0.375,0.177662037037037,0.0370370370370372,0.265625,0.50462962962963,0.750578703703704,1.,0.750578703703704,0.504629629629629,0.265624999999999,0.0370370370370372,0.177662037037038,0.375,0.551504629629631,0.703703703703703,0.828125,0.921296296296298,0.979745370370369,1 |

| SMOOTHSTEP/2 | 0,0,0.0196759259259259,0.0740740740740741,0.15625,0.259259259259259,0.376157407407407,0.5,0.623842592592593,0.740740740740741,0.84375,0.925925925925926,0.980324074074074,1.,0.980324074074074,0.925925925925926,0.84375,0.740740740740741,0.623842592592593,0.5,0.376157407407407,0.259259259259259,0.15625,0.0740740740740741,0.0196759259259259,0 |

| R_SMOOTHSTEP/2 | 0,1,0.980324074074074,0.925925925925926,0.84375,0.740740740740741,0.623842592592593,0.5,0.376157407407407,0.259259259259259,0.15625,0.0740740740740742,0.0196759259259256,0.,0.0196759259259256,0.0740740740740742,0.15625,0.259259259259259,0.376157407407407,0.5,0.623842592592593,0.740740740740741,0.84375,0.925925925925926,0.980324074074074,1 |

| SMOOTHSTEP/3 | 0,0,0.04296875,0.15625,0.31640625,0.5,0.68359375,0.84375,0.95703125,1.,0.95703125,0.84375,0.68359375,0.5,0.31640625,0.15625,0.04296875,0.,0.04296875,0.15625,0.31640625,0.5,0.68359375,0.84375,0.95703125,1 |

| R_SMOOTHSTEP/3 | 0,1,0.95703125,0.84375,0.68359375,0.5,0.31640625,0.15625,0.04296875,0.,0.04296875,0.15625,0.31640625,0.5,0.68359375,0.84375,0.95703125,1.,0.95703125,0.84375,0.68359375,0.5,0.31640625,0.15625,0.04296875,0 |

| SMOOTHSTEP/4 | 0,0,0.0740740740740741,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1.,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740741,0.,0.0740740740740741,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1.,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740741,0 |

| R_SMOOTHSTEP/4 | 0,1,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740742,0.,0.0740740740740742,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1.,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740742,0.,0.0740740740740742,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1 |

| COSINE | 0,1,0.995722430686905,0.982962913144534,0.961939766255643,0.933012701892219,0.896676670145617,0.853553390593274,0.80438071450436,0.75,0.691341716182545,0.62940952255126,0.565263096110026,0.5,0.434736903889974,0.37059047744874,0.308658283817455,0.25,0.195619285495639,0.146446609406726,0.103323329854382,0.0669872981077805,0.0380602337443566,0.0170370868554658,0.00427756931309475,0 |

| REVERSE_COSINE | 0,0,0.00427756931309475,0.0170370868554659,0.0380602337443566,0.0669872981077808,0.103323329854383,0.146446609406726,0.19561928549564,0.25,0.308658283817455,0.37059047744874,0.434736903889974,0.5,0.565263096110026,0.62940952255126,0.691341716182545,0.75,0.804380714504361,0.853553390593274,0.896676670145618,0.933012701892219,0.961939766255643,0.982962913144534,0.995722430686905,1 |

| TRUE_CUBIC_HERMITE | 0,0,0.199031876929012,0.325761959876543,0.424641927083333,0.498456790123457,0.549991560570988,0.58203125,0.597360869984568,0.598765432098765,0.589029947916667,0.570939429012346,0.547278886959876,0.520833333333333,0.49438777970679,0.470727237654321,0.45263671875,0.442901234567901,0.444305796682099,0.459635416666667,0.491675106095678,0.543209876543211,0.617024739583333,0.715904706790124,0.842634789737655,1 |

| TRUE_REVERSE_CUBIC_HERMITE | 0,1,0.800968123070988,0.674238040123457,0.575358072916667,0.501543209876543,0.450008439429012,0.41796875,0.402639130015432,0.401234567901235,0.410970052083333,0.429060570987654,0.452721113040124,0.479166666666667,0.50561222029321,0.529272762345679,0.54736328125,0.557098765432099,0.555694203317901,0.540364583333333,0.508324893904322,0.456790123456789,0.382975260416667,0.284095293209876,0.157365210262345,0 |

| FAKE_CUBIC_HERMITE | 0,0,0.157576195987654,0.28491512345679,0.384765625,0.459876543209877,0.512996720679012,0.546875,0.564260223765432,0.567901234567901,0.560546875,0.544945987654321,0.523847415123457,0.5,0.476152584876543,0.455054012345679,0.439453125,0.432098765432099,0.435739776234568,0.453125,0.487003279320987,0.540123456790124,0.615234375,0.71508487654321,0.842423804012347,1 |

| FAKE_REVERSE_CUBIC_HERMITE | 0,1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,0.487003279320988,0.453125,0.435739776234568,0.432098765432099,0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,0.546875,0.512996720679013,0.459876543209876,0.384765625,0.28491512345679,0.157576195987653,0 |

| ALL_A | 0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0 |

| ALL_B | 1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1 |

| ALL_R | R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R |

| ALL_U | U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U,U |

| ALL_X | X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X,X |

Of the above presets, A, B, C, D, and E threw an error and did not work, so I’ll try txt2img with a temporarily merged model with 37 presets that omits these 5 presets.

Execute Merge-Block-Weights!

Let’s make Grid-Images for comparison

Now I’ll execute txt2img trial by temporary merge. Since it is difficult to compare images if only each image is generated individually, the script XYZ-Plot, familiar to AUTOMATIC1111 users, is used to create a grid-image for comparison. Also, run XYZ-Plot several times, since the grid-image will be too long to view if all the presets are tried at once. The actual operation screen on the WebUI is shown below.

At first, RealMoon and BeautifulRealisticAsians are temporarily merged and compared for each preset. The actual generated grid-image for comparison is shown below. Be careful, there are a lot of images and each one is ridiculously large. It was a fucking pain in the ass to mosaic a large number of pussies.

I thought MID12_50 would be good because of the good feeling of her face and body, and also because of the low level of breakdown in her fingers and body. So I’ll execute MBW-merging RealMoon and BeautifulRealisticAsians with MID12_50 preset values:[0,0,0,0,0,0,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0,0,0,0,0,0].

Name this MBW-merged ckpt. I named it [realMoon_v10+(MID12_50)beautifulRealistic_v7.safetensors] for accuracy and clarity. It’s long, but it can’t be helped.

Continue with AgainMix MBW attempt. As before, I’ll create a grid-image with XYZ-Plot and compare all presets. Be careful because there are a lot of images and they are ridiculously large.

RealMoon has a trait of excessively yellow skin when it generates nude Asians/Yellows. Since Caucasians are the main source of learning, this ckpt may have emphasized other racial traits. BeautifulRealisticAsians is a ckpt that specializes in Asians, but it too has a habit of making the entire screen look yellowish …or brownish. AgainMix, on the other hand, uses K-POP idols and Chinese/Korean actresses as its main source of learning, so its makeup is heavy and, contrary to RealMoon, it tends to over-white Asians yellow skin (and background). I thought that if these 3 models were multiplied and kept in the middle, it would be possible to generate natural Japanese girl-children with just the right skin tone, which is why I adopted AgainMix.

The basic composition and balance of the human body is completed with [realMoon_v10+(MID12_50)beautifulRealistic_v7.safetensors], which was blended already. So I thought about which preset would make the face and skin color look like a Japanese elementary schoolgirl in neighborhood, and concluded that R_SMOOTHSTEP/2 would be appropriate. So I’ll execute a MBW-merge with the preset values of R_SMOOTHSTEP/2.

Then I tried to name this MBW-merged ckpt [realMoon_v10+(MID12_50)beautifulRealistic_v7+(R_SMST/2)againmix_v18.safetensors]… I got an error. I guessed that the /(slash) in (R_SMST/2) might have been mistaken by the system for a code indicating the hierarchy of Folders/Directories, so I changed it to [realMoon_v10+(MID12_50)beautifulRealistic_v7+(R_SMSTslash2)againmix_v18.safetensors] and the MBW-merge completed without any error. I think I might be getting clear-headed. To write it in an Agatha Christie-esque way, “It is the brain, the little Loli-Con gray cells on which one must rely. “

Now using the resulting MBW-merged model [realMoon_v10+(MID12_50)beautifulRealistic_v7+(R_SMSTslash2)againmix_v18.safetensors], I generated a trial girl-child image. And the finalized photorealistic pregnant girl-child-chan is shown below.

The above is a MBW-merge using SuperMerger to blend a ckpt for the photorealistic pregnant girl-children.It is truly wonderful that AI can legally produce such realistic pseudo child-pornography. And StableDiffusion is completely the wisdom of mankind because it can satisfy our pedophile-cocks without harming real children. I hope that AI will continue to make progress and save all Hentai-Human-beings in this world.

March 04, 2024 お風呂でオシッコマン (in JP) = Pissman in a Bathtub (in EN)